How to Use Incidents

Why Use Incidents in Sifflet?

When a data quality issue arises, the investigation process can be chaotic. Alerts fire, stakeholders need updates, and team members scramble to find the root cause. Sifflet's incidents are designed to bring order to this process.

An incident acts as a central hub for resolving a data problem, from initial detection to final resolution. It streamlines your entire issue resolution process by:

- Consolidating Related Alerts: Automatically grouping multiple related failures into a single, manageable issue.

- Providing Rich Context: Bringing together data lineage for root cause analysis and downstream impact reports in one place.

- Facilitating Collaboration: Giving your team a dedicated space to assign ownership, track progress, and communicate findings.

By managing data issues as incidents, your team can collaborate more effectively, reduce time-to-resolution, and build a reliable history of your data health.

The Incident Resolution Workflow

You've received an alert: a monitor has failed, and Sifflet has created an incident. This guide walks you through the typical workflow for investigating, collaborating on, and resolving data incidents using the Sifflet platform.

Step 1: Triage and Assign Ownership

Your first step is to assess the incident and assign it to the right person or team for investigation.

On the main Incidents page, you'll see a high-level overview. Click on the new incident to open the incident detail page.

-

Review the Incident: Quickly look at the monitor(s) that failed and the impacted data asset(s). If Sifflet has automatically grouped multiple failures, you'll see them listed. You can also read the AI-generated description for a quick summary.

-

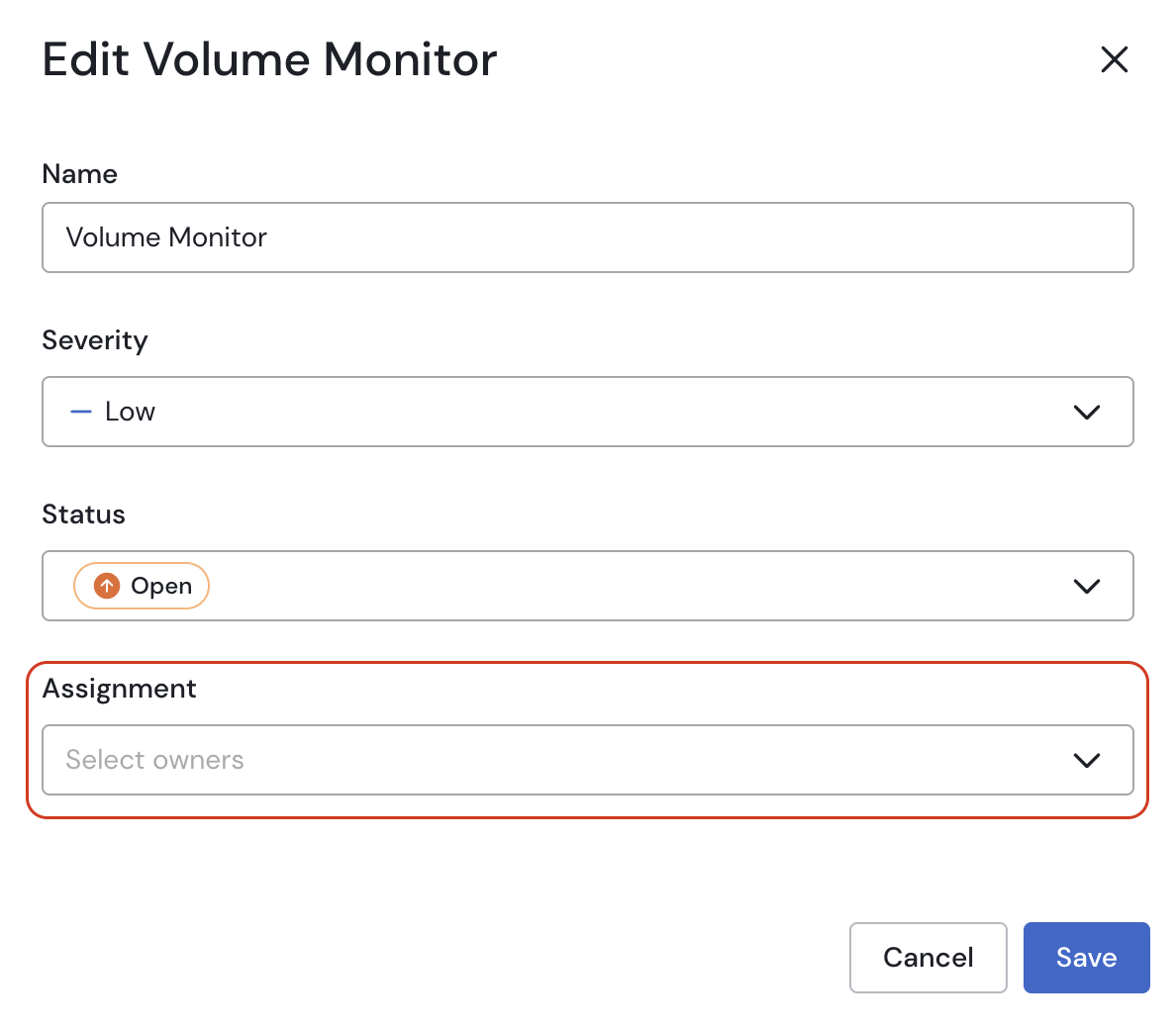

Assign an Owner: In the top right corner, use the "Assignee" dropdown to assign the incident. This ensures clear ownership and notifies the team member via email that they are responsible. Assigned incidents will also appear on that user's personal dashboard.

Assigning an incident owner

Step 2: Investigate the Root Cause and Impact

Now, it's time to dig in and understand what went wrong and what the impact is.

- Find the Root Cause with Lineage: Navigate to the Lineage tab. This is your most powerful tool for troubleshooting. The lineage view provides an end-to-end map of your data pipelines. Look upstream from the affected asset(s) to identify potential root causes, such as a failed transformation job or an upstream table with its own quality issues.

- Understand the Business Impact: Go back to the Overview tab and look at the Impacted Downstream Assets section. This list shows you all the dashboards, reports, and other data assets that depend on the one(s) with the problem. This is critical for understanding the business impact and prioritizing your response.
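To make the two directions of the investigation concrete, here is a minimal sketch of the idea behind reading a lineage graph: walk upstream to list root cause candidates, and walk downstream to list impacted assets. The asset names and the `UPSTREAMS` dictionary are hypothetical and only illustrate the concept; this is not Sifflet's data model or API, and in practice you do this visually in the Lineage tab and the Impacted Downstream Assets section.

```python
from collections import deque

# Hypothetical lineage: each asset maps to the assets it reads from (its direct upstreams).
# All names are invented for illustration.
UPSTREAMS = {
    "dashboard.revenue": ["mart.orders"],
    "report.churn": ["mart.orders"],
    "mart.orders": ["staging.orders", "staging.customers"],
    "staging.orders": ["raw.orders"],
    "staging.customers": ["raw.customers"],
}


def invert(edges):
    """Turn an asset -> upstreams map into an asset -> downstreams map."""
    downstreams = {}
    for asset, parents in edges.items():
        for parent in parents:
            downstreams.setdefault(parent, []).append(asset)
    return downstreams


def reachable(start, edges):
    """Breadth-first walk: every asset reachable from `start`, nearest first."""
    seen, order, queue = {start}, [], deque([start])
    while queue:
        current = queue.popleft()
        for neighbor in edges.get(current, []):
            if neighbor not in seen:
                seen.add(neighbor)
                order.append(neighbor)
                queue.append(neighbor)
    return order


# The monitor failed on "mart.orders" in this made-up example.
root_cause_candidates = reachable("mart.orders", UPSTREAMS)
impacted_assets = reachable("mart.orders", invert(UPSTREAMS))

print("Check upstream:", root_cause_candidates)
print("Notify owners of:", impacted_assets)
```

Listing the nearest assets first mirrors how an investigation usually proceeds: check the direct upstream tables before going further back in the pipeline.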

Step 3: Collaborate and Communicate

Keep your team and stakeholders informed throughout the process.

- Alert Impacted Teams: Based on the downstream assets you identified, proactively notify the owners of those assets (e.g., the analytics team) that the data they rely on may be compromised.

- Keep a Record in the Timeline: Use the Timeline section on the Overview page to document your investigation. Leave comments on your findings, actions taken, and hypotheses. This creates a clear audit trail for anyone else who joins the investigation and is invaluable for future post-mortems.

Step 4: Resolve and Close the Incident

Once you've fixed the underlying issue or completed your investigation, the final step is to formally close the incident in Sifflet.

When you close an incident, you provide a resolution status that explains the outcome. You also decide whether the underlying monitor(s) that triggered the incident should be marked as "Passing" for this specific run.

-

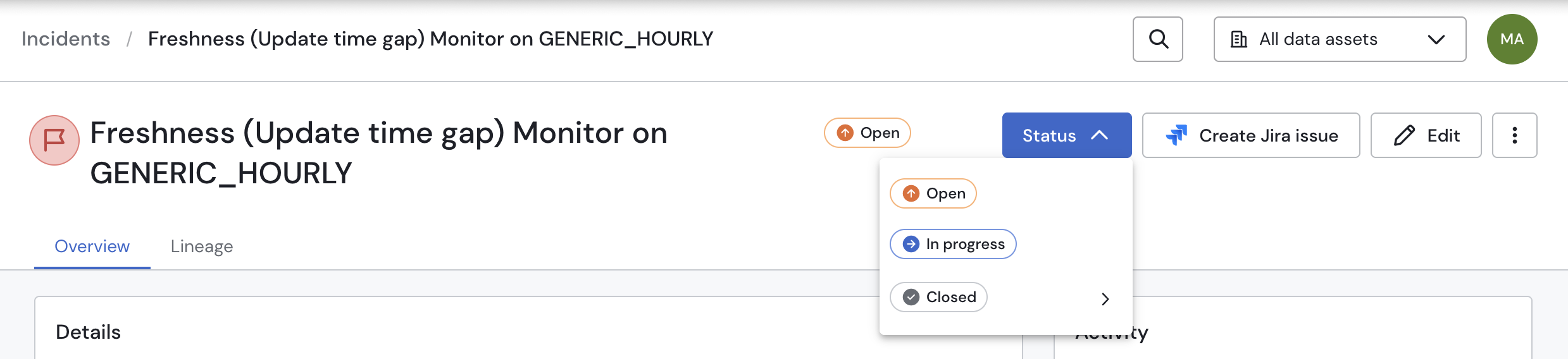

Update the Status: In the top-right corner of the page, click the status dropdown to see the resolution options.

Updating the incident status

-

Choose a Resolution Status: The statuses are grouped into two main categories:

- Mark Monitors as Passing: Choose an option from this category if the monitor's alert was not indicative of a real data quality failure. This will update the incident's status and mark the associated monitor run(s) as "Passing" in their history.

  - Reviewed: A general-purpose status indicating you have reviewed the alert and concluded it doesn't represent a true failure.

  - No action needed / Known error: Use this if the alert is for a known issue that is accepted and requires no immediate action.

  - False positive / Expected: The best choice when a monitor has fired incorrectly (a false positive) or the underlying data behavior, while unusual, was expected (e.g., due to a holiday or planned event).

- Do Not Mark Monitors as Passing: Choose an option from this category if the monitor correctly identified a real data quality issue. The incident will be closed, but the monitor's history will continue to reflect that it failed on this run.

  - Fixed: The most common status. Use this when the monitor correctly detected an issue, and your team has successfully resolved the underlying problem.

  - Reviewed: A general-purpose status to indicate you've acknowledged the alert as a valid failure, even if no action was taken.

  - No action needed / Known error: Select this if the alert was for a real, known issue, but you have decided not to take action at this time.

  - False positive / Expected: Use this if you consider the alert a false positive but do not wish to alter the monitor's historical run status from "Failed" to "Passing."

  - Duplicate: Choose this if this incident is a duplicate of another existing incident that is already being tracked.
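The closing decision has two parts: the resolution status, and whether the monitor run(s) are marked as "Passing". The sketch below models that combination so the rules in the list above are easy to check at a glance. The `Resolution` and `CloseDecision` names are hypothetical and exist only for this illustration; they are not part of any Sifflet API. It also assumes that "Duplicate", like "Fixed", is only offered under "Do Not Mark Monitors as Passing".

```python
from dataclasses import dataclass
from enum import Enum


class Resolution(Enum):
    FIXED = "Fixed"
    REVIEWED = "Reviewed"
    NO_ACTION_NEEDED = "No action needed / Known error"
    FALSE_POSITIVE = "False positive / Expected"
    DUPLICATE = "Duplicate"


# Statuses listed under "Mark Monitors as Passing" in this guide.
# Assumption: "Fixed" and "Duplicate" are only available without marking monitors as passing.
PASSING_ALLOWED = {
    Resolution.REVIEWED,
    Resolution.NO_ACTION_NEEDED,
    Resolution.FALSE_POSITIVE,
}


@dataclass(frozen=True)
class CloseDecision:
    resolution: Resolution
    mark_monitors_passing: bool

    def __post_init__(self):
        # Reject combinations that the two categories do not offer.
        if self.mark_monitors_passing and self.resolution not in PASSING_ALLOWED:
            raise ValueError(
                f"{self.resolution.value!r} is not available under 'Mark Monitors as Passing'"
            )

    def monitor_run_status(self) -> str:
        """Status the monitor run shows in its history once the incident is closed."""
        return "Passing" if self.mark_monitors_passing else "Failed"


# A holiday caused an expected drop in volume: close it and clear the failed run.
expected_drop = CloseDecision(Resolution.FALSE_POSITIVE, mark_monitors_passing=True)

# A real issue was repaired: close it but keep the failed run in the history.
repaired = CloseDecision(Resolution.FIXED, mark_monitors_passing=False)
```

The point of the model is that the same status name (for example "Reviewed") can lead to two different monitor histories, so the category you pick matters as much as the status itself.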

