Incident Page Filtering Enhancements

by Gabriela Romero

We’ve improved filtering on the Incident page to make it easier to identify, prioritize, and review incidents efficiently.

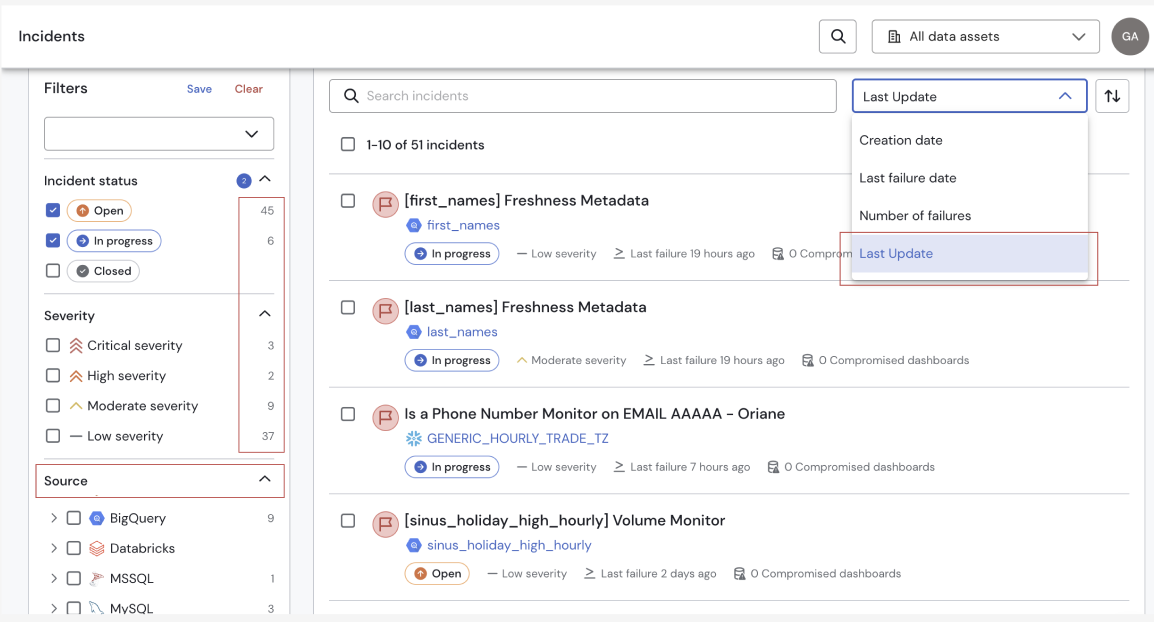

Incident Count per Filter

Each filter now displays the number of associated incidents. This gives a quicker overview of impact before applying filters.

Data Source Filter Added

A new “Data Source” filter is now available on the Incident page, allowing users to refine incidents by their data source.

“Last Updated” Filter

We added a “Last Updated” filter that lets users sort incidents by their most recent update, whether it was triggered by a user or automatically by the system.