Sensitivity

Overview

A Monitor's sensitivity is a parameter that defines how far a value must deviate from expected behavior before the monitor treats it as an anomaly and raises an alert. Choosing the right level of sensitivity is crucial for maintaining data integrity and reliability. Higher sensitivity corresponds to a lower detection threshold: smaller deviations are detected, but the number of false positives may increase.
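To make the threshold idea concrete, here is a minimal sketch that uses a simple z-score check. It is an illustration only: the `Z_THRESHOLDS` values and the `find_anomalies` helper are assumptions made for this example, and Sifflet's actual detection models are not described on this page and may work differently.

```python
from statistics import mean, stdev

# Hypothetical mapping from sensitivity level to a z-score threshold.
# These numbers are illustrative only and are not Sifflet's actual values.
Z_THRESHOLDS = {"low": 4.0, "normal": 3.0, "high": 2.0}


def find_anomalies(history, new_values, sensitivity="normal"):
    """Return the new values whose z-score against the history exceeds the threshold."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation of past observations
    threshold = Z_THRESHOLDS[sensitivity]
    return [v for v in new_values if abs(v - mu) / sigma > threshold]


# Made-up sample data: ten past observations and four new ones.
history = [100, 102, 98, 101, 99, 100, 103, 97, 100, 100]
new_values = [101, 104, 106, 110]

for level in ("low", "normal", "high"):
    print(level, find_anomalies(history, new_values, level))
```

With this sample data, the low setting flags only 110, the normal setting flags 106 and 110, and the high setting flags 104, 106, and 110. Each step up in sensitivity catches everything the previous level caught plus smaller deviations.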

How to

Where to find it

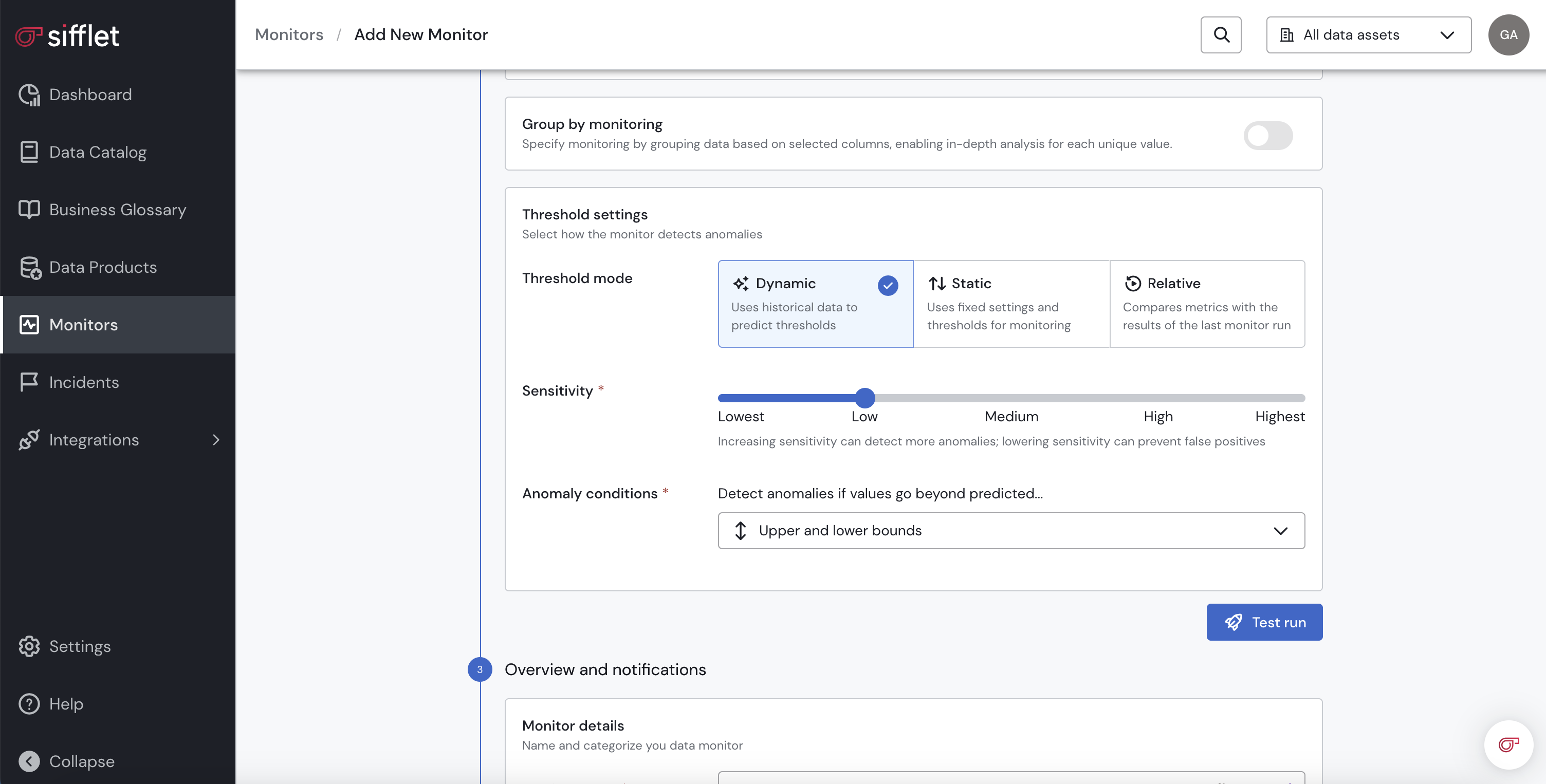

The Monitor Sensitivity setting is available in the Sifflet Monitor Wizard.

How to use it

Choosing the right sensitivity helps you align the number of alerts with the importance of your data. If you want to monitor only significant events and ignore smaller movements, lower the sensitivity to avoid false positive alerts. Conversely, if even a slight change in your data is critical, increase the sensitivity to capture it.
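The trade-off between sensitivity and false positives can be estimated with some simple arithmetic. The sketch below assumes a metric that is normally distributed when healthy and a monitor that alerts when the z-score exceeds a threshold. Both the thresholds and the helper functions are hypothetical and serve only to show the order of magnitude involved; they do not describe Sifflet's internals.

```python
import math

# Hypothetical z-score thresholds per sensitivity level (illustrative, not Sifflet's values).
Z_THRESHOLDS = {"low": 4.0, "normal": 3.0, "high": 2.0}


def false_alert_rate(z_threshold):
    """Two-sided tail probability P(|Z| > z) for a standard normal variable."""
    return math.erfc(z_threshold / math.sqrt(2))


def expected_false_alerts(sensitivity, checks):
    """Expected number of alerts raised on healthy data over a number of monitor runs."""
    return false_alert_rate(Z_THRESHOLDS[sensitivity]) * checks


for level in ("low", "normal", "high"):
    alerts = expected_false_alerts(level, 1000)
    print(f"{level}: about {alerts:.2f} false alerts per 1000 runs")
```

Under these assumptions, a threshold of 2 standard deviations produces roughly 45 false alerts per 1000 runs, a threshold of 3 produces fewer than 3, and a threshold of 4 produces well under 1. This is why a high sensitivity is best reserved for data where a missed issue costs more than an occasional spurious alert.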

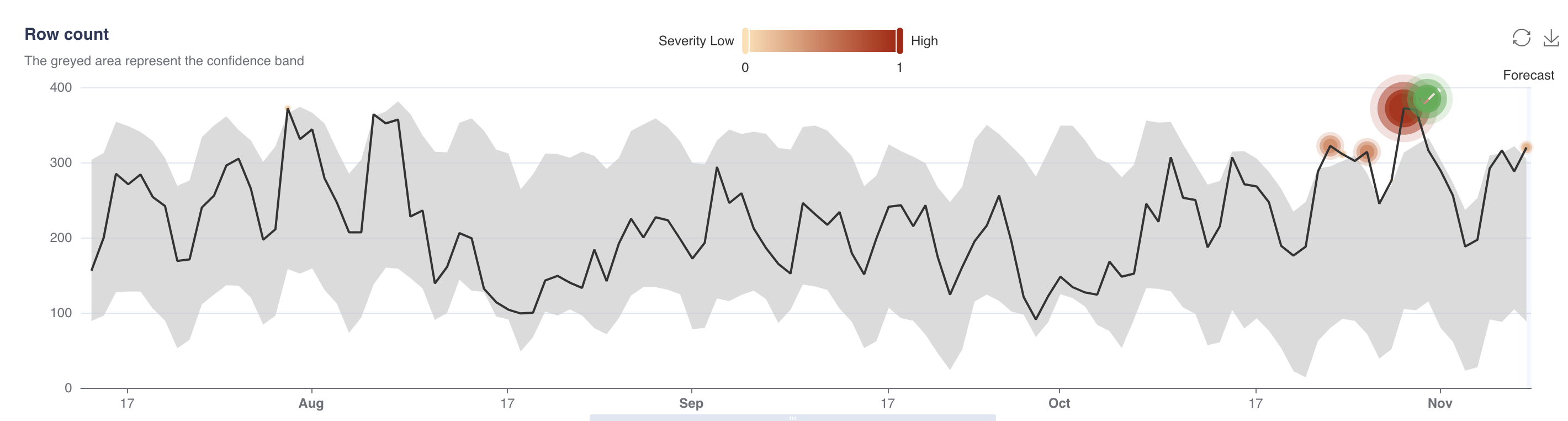

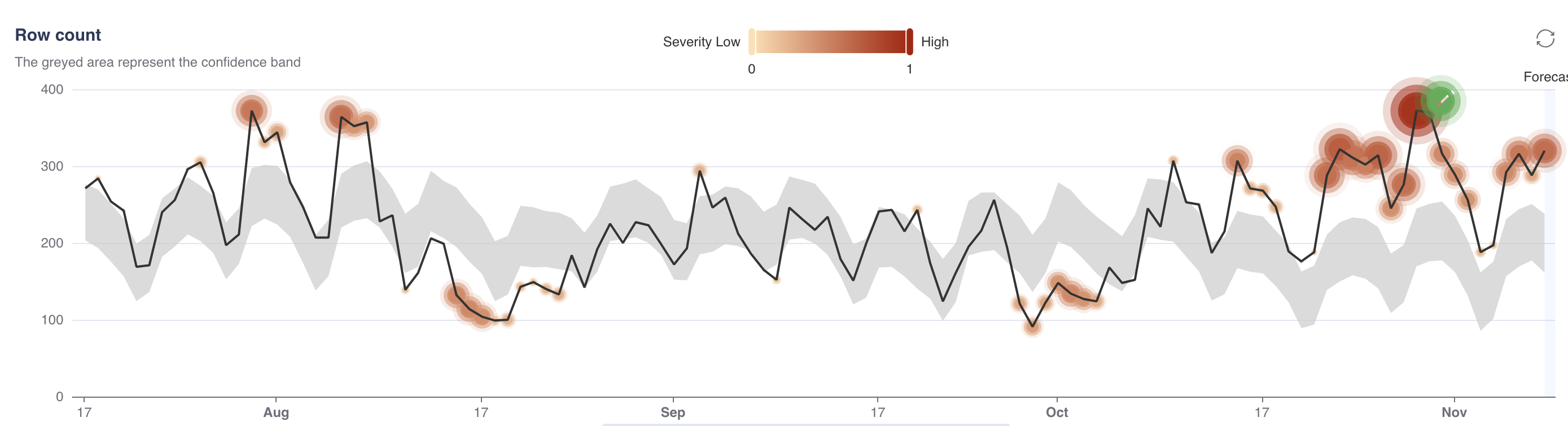

The illustrations below show how a higher Sensitivity level increases the number of Data Quality issues detected.

Sensitivity: Low

Sensitivity: High

Best Practices

We recommend setting the model sensitivity according to the criticality of your data.

| Data Criticality | Recommended Sensitivity |
|---|---|
| High | High |
| Normal | Normal |
| Low | Low |
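If you track data criticality in your own inventory, the recommendation above can be applied in bulk with a small lookup. The following is a sketch only: the dataset names and the `recommend_sensitivity` helper are hypothetical, and the result still has to be set for each monitor in the Monitor Wizard.

```python
# Recommended sensitivity per data criticality, taken from the table above.
RECOMMENDED_SENSITIVITY = {"High": "High", "Normal": "Normal", "Low": "Low"}


def recommend_sensitivity(criticality: str) -> str:
    """Look up the recommended sensitivity, ignoring case and surrounding spaces."""
    key = criticality.strip().capitalize()
    if key not in RECOMMENDED_SENSITIVITY:
        raise ValueError(f"Unknown criticality: {criticality!r}")
    return RECOMMENDED_SENSITIVITY[key]


# Hypothetical datasets with a criticality label assigned by the data owner.
datasets = {
    "billing.invoices": "high",
    "marketing.page_views": "normal",
    "sandbox.scratch": "low",
}

plan = {name: recommend_sensitivity(level) for name, level in datasets.items()}
print(plan)
```

Raising an error on an unknown label, rather than falling back to a default, keeps a mislabeled dataset from silently receiving the wrong sensitivity.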
