dbt Core

You can integrate Sifflet with dbt Core by sending the following dbt artifacts to Sifflet whenever you execute a `dbt run`, `dbt test`, or `dbt build` command:

- `manifest.json`
- `run_results.json`

The main steps to activate the integration are:

- Create a dbt Core source in Sifflet

- Adapt your dbt workflow

- Send the dbt artifacts to Sifflet

1. Create a dbt Core source in Sifflet

- Access the "New source" page and then choose dbt Core.

- Provide the required parameters:

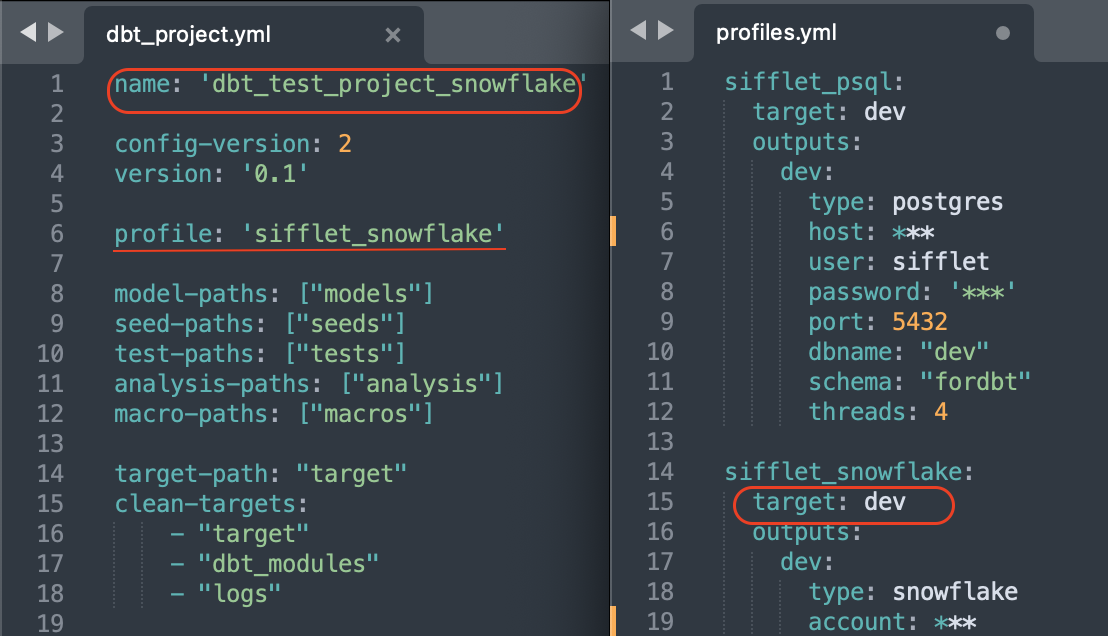

  - Name: Choose a name for your dbt Core source (the name that will be used to reference the source in Sifflet).
  - Project Name: The name of your dbt project (defined in your `dbt_project.yml` file).
  - Target: The name of the target used to run your project (defined in your `profiles.yml` file).

- Configure the alerting channels to notify when models fail (see pipeline alerting for more details).

Case sensitivity: Because dbt is case sensitive, make sure the Project Name and Target you enter match the names in your dbt project exactly.

In the example `profiles.yml`, the profile is `sifflet_snowflake` and its target is `dev`, so the Target parameter is `dev`.

2. Adapt your dbt workflow

In your pipelines, whenever you use a dbt command (`dbt build`, `dbt run`, or `dbt test`) to execute your dbt project or parts of it, add a step that sends the dbt artifacts to Sifflet. Step 3 below describes several ways to send them.

dbt artifacts overwrite the output of previous executions: When you run a dbt execution command (`dbt build`, `dbt run`, or `dbt test`) on specific models via a selector (see the dbt docs), the generated artifacts overwrite any existing artifacts from previous runs, even if those runs executed other models. Therefore, to get the complete project metadata in Sifflet, add a step that sends the dbt artifacts to Sifflet after every dbt command.
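A quick way to see which nodes the latest artifacts cover is to read `run_results.json` directly. The following is a minimal Python sketch, assuming the standard dbt `run_results.json` layout (a top-level `results` list whose entries carry `unique_id` and `status`); the function name `summarize_run_results` is hypothetical and not part of dbt or Sifflet.

```python
import json
from pathlib import Path


def summarize_run_results(path: str = "target/run_results.json") -> dict[str, str]:
    """Map each node's unique_id to its status in a dbt run_results.json file.

    The file only describes the nodes executed by the most recent dbt command,
    because dbt rewrites it on every `dbt run`, `dbt test`, or `dbt build`.
    """
    data = json.loads(Path(path).read_text())
    return {result["unique_id"]: result["status"] for result in data["results"]}
```

After `dbt run --select model_2 model_3`, calling `summarize_run_results()` from the project directory should return entries for those two models only, which is why the artifacts need to reach Sifflet before the next command runs.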

Sending artifacts for both dbt run and dbt test

If you run your dbt models and their tests in separate commands, you need to send the dbt artifacts to Sifflet after each command. The expected order of steps is:

1. `dbt run`
2. Send the artifacts to Sifflet
3. `dbt test`
4. Send the artifacts to Sifflet

This ensures that Sifflet has the full metadata of your dbt execution.

Ensure that you're sending the artifacts after every command: If you use multiple `dbt run` commands within your pipeline, send the artifacts to Sifflet after every command, regardless of how many models it executes:

1. `dbt run --select model_1`
2. Send the artifacts to Sifflet to retrieve metadata about the status of `model_1`
3. `dbt run --select model_2 model_3`
4. Send the artifacts to Sifflet to retrieve metadata about the status of `model_2` and `model_3`
5. `dbt test`
6. Send the artifacts to Sifflet to retrieve metadata about the output of the dbt tests
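The sequence above can be sketched as a small Python wrapper. This is an illustration, not a Sifflet API: `run_dbt_pipeline` and the `send_artifacts` callback are hypothetical names, and `send_artifacts` stands for whichever method from step 3 you use (the Airflow operator, the Sifflet CLI, or the API).

```python
import subprocess
from typing import Callable, Sequence


def _run_subprocess(cmd: Sequence[str]) -> int:
    """Run one dbt command and return its exit code without raising."""
    return subprocess.run(list(cmd)).returncode


def run_dbt_pipeline(
    commands: Sequence[Sequence[str]],
    send_artifacts: Callable[[], None],
    run: Callable[[Sequence[str]], int] = _run_subprocess,
) -> None:
    """Run each dbt command, sending the artifacts to Sifflet right after it.

    Artifacts are sent even when a command fails, so that the failure itself
    reaches Sifflet; the pipeline then stops with an error.
    """
    for cmd in commands:
        exit_code = run(cmd)
        # The command just overwrote target/run_results.json: send it now,
        # before the next command replaces it.
        send_artifacts()
        if exit_code != 0:
            raise RuntimeError(f"{' '.join(cmd)} failed with exit code {exit_code}")
```

For the sequence above, `commands` would be `[["dbt", "run", "--select", "model_1"], ["dbt", "run", "--select", "model_2", "model_3"], ["dbt", "test"]]`. Sending the artifacts before checking the exit code is a design choice of this sketch: when models or tests fail, dbt usually still writes `run_results.json`, so the failure can reach Sifflet before the pipeline stops.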

Sending the artifacts for dbt build

If you use the `dbt build` command, you only need to send the artifacts to Sifflet after it runs. However, if you split the execution of your models across multiple `dbt build` commands, send the artifacts to Sifflet after every `dbt build` command.

3. Send the dbt artifacts to Sifflet

To send your project's dbt artifacts to Sifflet programmatically:

- Generate a Sifflet API token with the Editor or Admin role (see the Sifflet API token documentation for details).
- Send the dbt artifacts to Sifflet using one of the following options:
  - The SiffletDbtIngestOperator Airflow operator
  - The Sifflet CLI
  - The Sifflet API


SiffletDbtIngestOperator operator

If you use Airflow as your orchestrator, you can use the SiffletDbtIngestOperator operator to send dbt artifacts to Sifflet. The operator takes the following parameters:

- `task_id`: Unique identifier of the task.
- `input_folder`: Directory where the dbt artifacts can be found.
- `target`: The name of the target used to run your project (defined in your `profiles.yml` file).
- `project_name`: The name of your dbt project (defined in your `dbt_project.yml` file).

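```python
# SiffletDbtIngestOperator is provided by Sifflet's Airflow integration; import it
# as described in that package's documentation. Define this task inside your DAG
# and set it downstream of every task that runs a dbt command.
sifflet_dbt_ingest = SiffletDbtIngestOperator(
    task_id="sifflet_dbt_ingest",
    input_folder="<DBT_PROJ_DIR>",  # directory where the dbt artifacts can be found
    target="<DBT_TARGET>",  # target from profiles.yml
    project_name="<DBT_PROJECT_NAME>",  # project name from dbt_project.yml
)
```

Sifflet's CLI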

You can also send the dbt artifacts with Sifflet's CLI. To configure the CLI with the API token you generated previously, see the Sifflet CLI configuration documentation.

For usage details, refer to the `sifflet ingest dbt` command-line reference.

Sifflet's API

Another option is to call the Sifflet API directly by adding the following script to your pipeline:

```bash
#!/bin/bash

# Replace the placeholders; store the token as a secret rather than in the script.
accessToken="<INSERT_ACCESS_TOKEN>"
projectName='<project_name>'
target='<target>'

# Run from the dbt project directory so that target/ contains the artifacts.
# --fail makes this step fail on HTTP errors; add -v only when debugging,
# since it prints the Authorization header.
curl --fail "https://<tenant_name>.siffletdata.com/api/v1/metadata/dbt/$projectName/$target" \
  -F 'manifest=@target/manifest.json' \
  -F 'run_results=@target/run_results.json' \
  -H "Authorization: Bearer $accessToken"
```

- `accessToken`: The Sifflet API token you previously generated and stored.
- `projectName`: The name of your dbt project (defined in your `dbt_project.yml` file).
- `target`: The name of the target used to run your project (defined in your `profiles.yml` file).
- `tenant_name`: The name of your Sifflet instance. If the address of your Sifflet instance is `https://abcdef.siffletdata.com`, your tenant is `abcdef`.
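If your pipeline step runs Python rather than a shell, the same request can be built with the standard library alone. This is a minimal sketch, assuming the endpoint, form field names (`manifest`, `run_results`), and `Bearer` header shown in the curl script; `build_dbt_upload_request` is a hypothetical helper, and the `application/json` part type is an assumption the script does not specify.

```python
import urllib.request
import uuid
from pathlib import Path


def build_dbt_upload_request(
    tenant: str,
    project_name: str,
    target: str,
    access_token: str,
    target_dir: str = "target",
) -> urllib.request.Request:
    """Build (but do not send) a multipart POST mirroring the curl script above."""
    boundary = uuid.uuid4().hex
    body = bytearray()
    # Same form fields as the curl -F options: manifest and run_results.
    for field, filename in (("manifest", "manifest.json"), ("run_results", "run_results.json")):
        body += (
            f"--{boundary}\r\n"
            f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'
            "Content-Type: application/json\r\n\r\n"
        ).encode()
        body += (Path(target_dir) / filename).read_bytes() + b"\r\n"
    body += f"--{boundary}--\r\n".encode()

    url = f"https://{tenant}.siffletdata.com/api/v1/metadata/dbt/{project_name}/{target}"
    return urllib.request.Request(
        url,
        data=bytes(body),
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
    )
```

To send it, pass the result to `urllib.request.urlopen`, which raises `urllib.error.HTTPError` on a non-2xx response. As with the shell script, read the token from your secret store rather than hardcoding it.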

FAQ

Do I need to send both manifest.json and run_results.json every time I run a dbt command?

No. You can send `manifest.json` just once, but you must send `run_results.json` after every dbt command.

If your pipeline runs several dbt commands, you can send `manifest.json` at any point during the pipeline's execution, but make sure to send the `run_results.json` file generated by every command.
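The rule above can be sketched as a helper that decides which files go into each upload. This assumes, as the answer above implies, that Sifflet accepts an upload containing only `run_results`; the field names match the `-F` fields of the API script, and `artifacts_for_upload` is a hypothetical name.

```python
from pathlib import Path


def artifacts_for_upload(upload_index: int, target_dir: str = "target") -> dict[str, str]:
    """Return the form fields (name -> file path) for the n-th upload of a pipeline.

    run_results.json is included every time, because each dbt command
    overwrites it; manifest.json is only included in the first upload.
    """
    target = Path(target_dir)
    fields = {"run_results": str(target / "run_results.json")}
    if upload_index == 0:
        fields["manifest"] = str(target / "manifest.json")
    return fields
```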

The dbt tests listed in the monitor page do not show any status or last run information

If the dbt tests listed on the monitor page show no status or last run information, Sifflet most likely did not receive the necessary artifacts. Make sure that:

- You're running the dbt commands in the right order based on your workflow.

- You're sending the dbt artifacts to Sifflet after every execution of a `dbt build`, `dbt run`, or `dbt test` command.

For more information, refer to step 2 above.
