Introducing Our Revamped Lineage: Deeper Insights, Cleaner Experience

by Mahdi Karabiben

We're excited to announce a complete overhaul of Sifflet's lineage! We've rebuilt the entire experience from the ground up to make it easier to digest, more powerful, and far more intuitive to navigate.

The revamp brings a cleaner interface that surfaces more metadata, giving you the full context you need without the clutter.

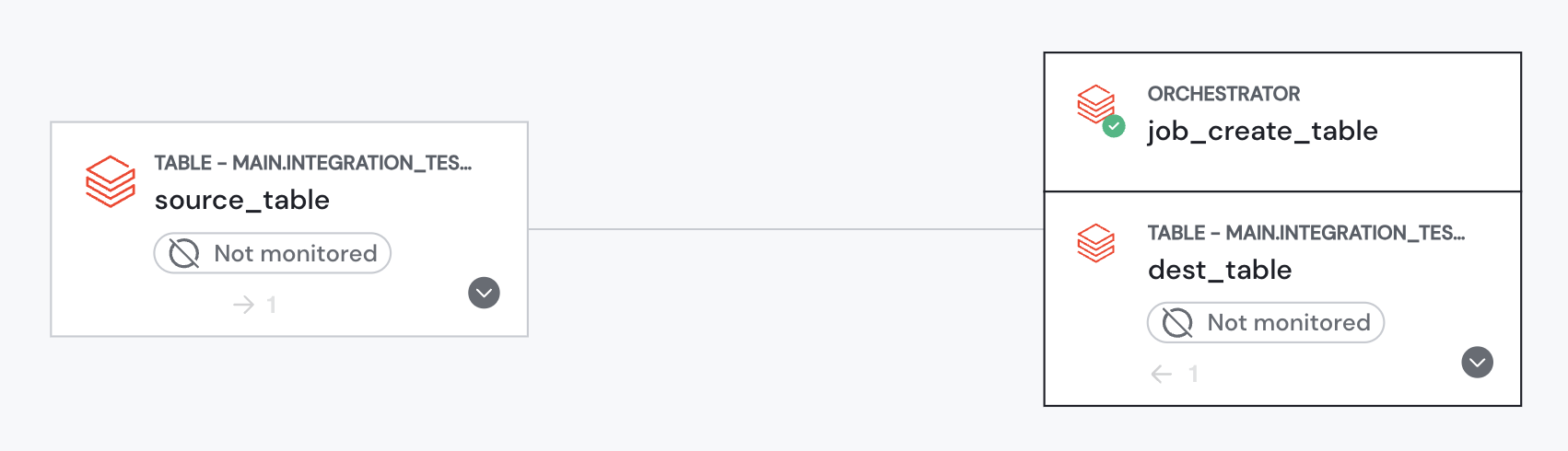

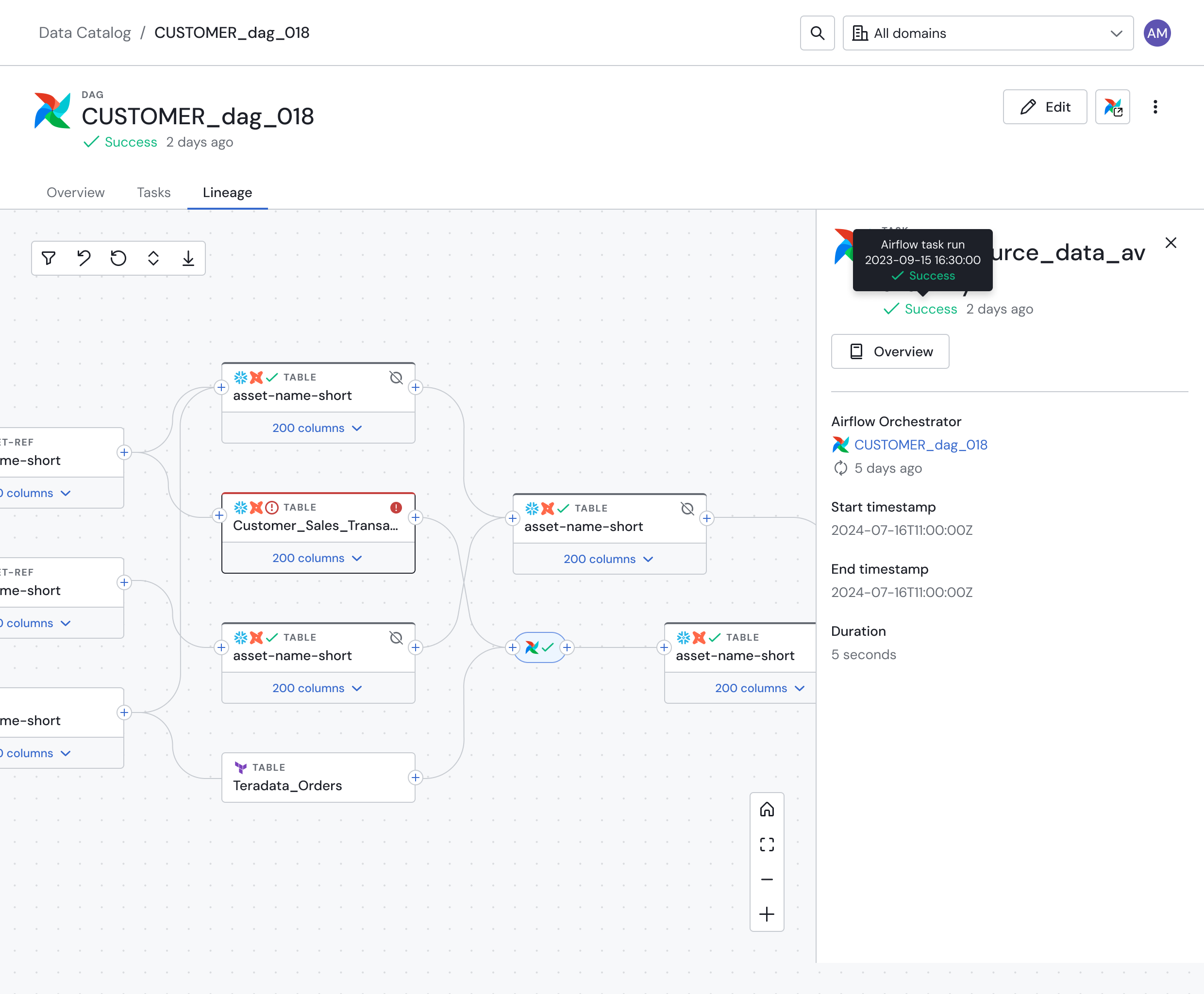

The new lineage graph.

What’s New?

- 📈 Easier to Digest, Yet More Powerful: The new UI is designed for clarity. We’ve streamlined the view so you can understand data flow at a glance, while simultaneously surfacing richer metadata for deeper analysis.

- 🔄 New "Transformation" Nodes: You can now visualize pipeline steps directly within your lineage graph. This includes new, dedicated nodes for processes such as Airflow DAGs, giving you a true end-to-end picture of how your data is transformed.

- 🧭 Better Navigation for Complex Graphs: We know lineage can get complicated. The new graph comes with enhanced controls for panning, zooming, and exploring, making it simple to navigate even the most complex data ecosystems.

How to Access It

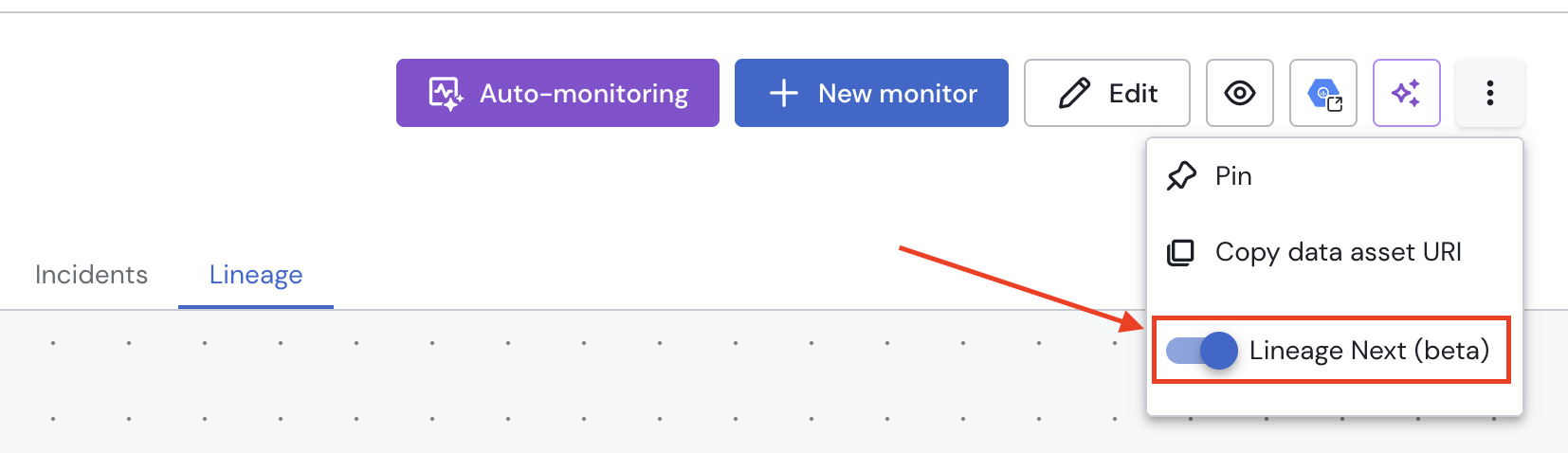

You have full control. You can switch between the classic view and the new revamped lineage using a dedicated toggle on the asset and incident pages.

The toggle to activate the new lineage experience.

Coming Soon

We're already working on the next enhancements: collapsible and expandable BI lineage nodes, and data product lineage. These will give you even more control to simplify your view and focus on the assets that matter most.